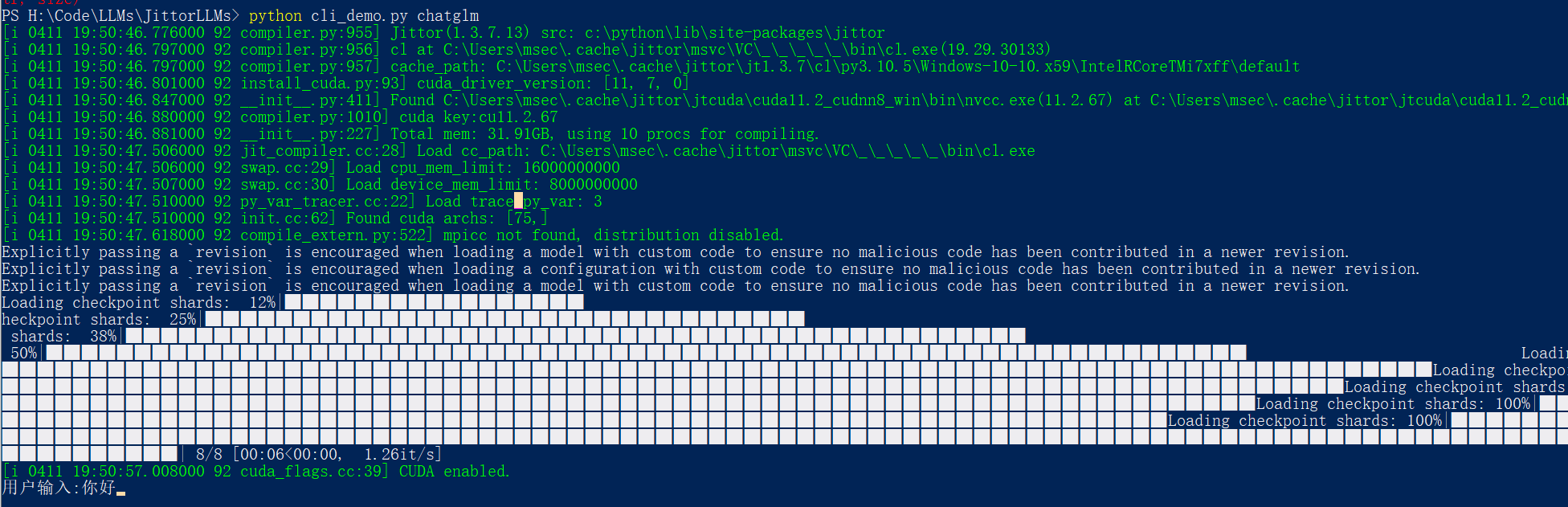

Run:

python cli_demo.py chatglm

Then enter "你好" ("hello") at the prompt.

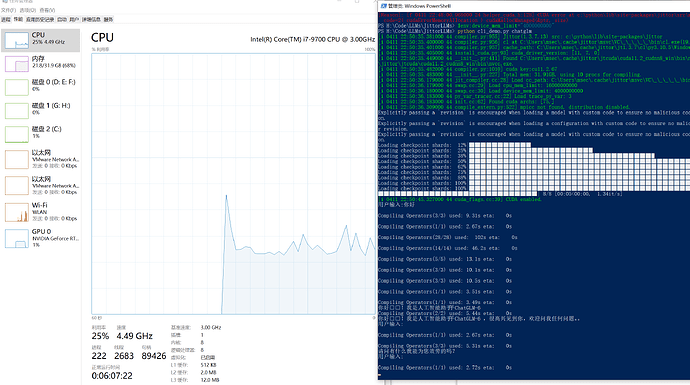

The error log is as follows:

) -> C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py:973(forward) -> C:\python\lib\site-packages\jtorch\nn\__init__.py:16(__call__) -> C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py:635(forward) -> C:\python\lib\site-packages\jtorch\nn\__init__.py:16(__call__) -> C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py:537(forward) -> C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py:174(gelu) -> C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py:169(gelu_impl) -> }

[e 0411 19:54:06.551000 92 mem_info.cc:101] appear time -> node cnt: {3:620, }

Traceback (most recent call last):

File "H:\Code\LLMs\JittorLLMs\cli_demo.py", line 9, in <module>

model.chat()

File "H:\Code\LLMs\JittorLLMs\models\chatglm\__init__.py", line 36, in chat

for response, history in self.model.stream_chat(self.tokenizer, text, history=history):

File "C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py", line 1259, in stream_chat

for outputs in self.stream_generate(**input_ids, **gen_kwargs):

File "C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py", line 1336, in stream_generate

outputs = self(

File "C:\python\lib\site-packages\jtorch\nn\__init__.py", line 16, in __call__

return self.forward(*args, **kw)

File "C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py", line 1138, in forward

transformer_outputs = self.transformer(

File "C:\python\lib\site-packages\jtorch\nn\__init__.py", line 16, in __call__

return self.forward(*args, **kw)

File "C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py", line 973, in forward

layer_ret = layer(

File "C:\python\lib\site-packages\jtorch\nn\__init__.py", line 16, in __call__

return self.forward(*args, **kw)

File "C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py", line 614, in forward

attention_outputs = self.attention(

File "C:\python\lib\site-packages\jtorch\nn\__init__.py", line 16, in __call__

return self.forward(*args, **kw)

File "C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py", line 454, in forward

cos, sin = self.rotary_emb(q1, seq_len=position_ids.max() + 1)

File "C:\python\lib\site-packages\jtorch\nn\__init__.py", line 16, in __call__

return self.forward(*args, **kw)

File "C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py", line 202, in forward

t = torch.arange(seq_len, device=x.device, dtype=self.inv_freq.dtype)

File "C:\python\lib\site-packages\jtorch\__init__.py", line 31, in inner

ret = func(*args, **kw)

File "C:\python\lib\site-packages\jittor\misc.py", line 809, in arange

if isinstance(start, jt.Var): start = start.item()

RuntimeError: [f 0411 19:54:06.552000 92 executor.cc:682]

Execute fused operator(18/43) failed.

[JIT Source]: C:\Users\msec\.cache\jittor\jt1.3.7\cl\py3.10.5\Windows-10-10.x59\IntelRCoreTMi7xff\default\cu11.2.67\jit\__opkey0_broadcast_to__Tx_float16__DIM_3__BCAST_1__opkey1_broadcast_to__Tx_float16__DIM_3____hash_9730a00665a5a466_op.cc

[OP TYPE]: fused_op:( broadcast_to, broadcast_to, binary.multiply, reduce.add,)

[Input]: float16[16384,4096,]transformer.layers.15.mlp.dense_h_to_4h.weight, float16[4,4096,],

[Output]: float16[4,16384,],

[Async Backtrace]: ---

H:\Code\LLMs\JittorLLMs\cli_demo.py:9 <<module>>

H:\Code\LLMs\JittorLLMs\models\chatglm\__init__.py:36 <chat>

C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py:1259 <stream_chat>

C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py:1336 <stream_generate>

C:\python\lib\site-packages\jtorch\nn\__init__.py:16 <__call__>

C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py:1138 <forward>

C:\python\lib\site-packages\jtorch\nn\__init__.py:16 <__call__>

C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py:973 <forward>

C:\python\lib\site-packages\jtorch\nn\__init__.py:16 <__call__>

C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py:635 <forward>

C:\python\lib\site-packages\jtorch\nn\__init__.py:16 <__call__>

C:\Users\msec/.cache\huggingface\modules\transformers_modules\local\modeling_chatglm.py:535 <forward>

C:\python\lib\site-packages\jtorch\__init__.py:126 <__call__>

C:\python\lib\site-packages\jittor\nn.py:638 <execute>

C:\python\lib\site-packages\jittor\nn.py:34 <matmul_transpose>

C:\python\lib\site-packages\jittor\nn.py:42 <matmul_transpose>

[Reason]: [f 0411 19:54:06.422000 92 helper_cuda.h:128] CUDA error at c:\python\lib\site-packages\jittor\src\mem\allocator\cuda_device_allocator.cc:33 code=2( cudaErrorMemoryAllocation ) cudaMallocManaged(&ptr, size)
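
The `[Reason]` line reports `cudaErrorMemoryAllocation` from `cudaMallocManaged`, i.e. a memory allocation that failed. As a rough sanity check, the sketch below computes the sizes of the tensors named in the `[Input]` and `[Output]` lines, assuming dense float16 storage at 2 bytes per element. `tensor_mib` is a hypothetical helper written for this post, not part of Jittor.

```python
# Bytes per element for float16 (half precision).
FLOAT16_BYTES = 2


def tensor_mib(shape, bytes_per_elem=FLOAT16_BYTES):
    """Return the size in MiB of a dense tensor with the given shape."""
    n = 1
    for dim in shape:
        n *= dim
    return n * bytes_per_elem / (1024 ** 2)


# Shapes copied from the [Input] and [Output] lines of the log above.
weight_mib = tensor_mib((16384, 4096))  # transformer.layers.15.mlp.dense_h_to_4h.weight
input_mib = tensor_mib((4, 4096))
output_mib = tensor_mib((4, 16384))

print(f"weight: {weight_mib} MiB")
print(f"input:  {input_mib} MiB")
print(f"output: {output_mib} MiB")
```

The output buffer of this op is small compared with the weight, so the failure may mean that memory was already nearly exhausted by the time layer 15 ran, rather than that this particular tensor is large. That is only a guess from the log.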

Could someone more experienced help me figure out what the problem is?